# Foreword

As a Chief Information Security Officer for a major research university, I find it frustrating to know that the clues to imminent security breaches are within reach but not easily accessible. Analysts work tirelessly to construct a timeline of what happened, how, and when, but by the time they have the information, the damage has already been done: sensitive data has been stolen, important information disclosed, and critical operations shut down by threat actors. What's even more disheartening is that the majority of breaches result from simple phishing attacks in which users' credentials are compromised. Other breaches involve privilege escalation, where an attacker who has gained access to an environment increases their level of access to install malware or exfiltrate data.

Why do common attacks continue to succeed against organizations?

- We revel in the thrill of investigating a major attack, thirsting to comprehend its scope, and engaging in hand-to-hand combat with the attacker as they attempt to evade the containment measures set in place by the response team. The adrenaline rush is palpable as we work to uncover the details and restore order.

- This is an exciting scenario, like the cool scenes in movies where the hero is under immense pressure to save the world with limited time. They swiftly uncover the culprit, decipher their method, and promptly boot them out of the network, reclaiming control of the system and making everything right again.

- During security incidents and investigations, teams from various departments and management levels within the organization come together - IT, Legal, Communications, Functional Service Owners, Subject Matter Experts, and Executives - to contain the incident and bring things back to normal. Despite their hard work, once normalcy is restored there is often little urgency to switch to prevention mode with the same drive and collaboration. Instead, the organization inadvertently becomes passive, waiting for the next incident to happen. Sadly, while the wait is on, the data is already trying to tell us that the next incident has begun. We just cannot see it yet, because we are not looking!

The ODAM (Operationalizing Data Analytics Methodology) promises a framework with repeatable processes for translating and illuminating the story that the data around us is trying to communicate, so that positive actions can be taken to defend our digital assets and capabilities. ODAM promises to take away the common excuses for not leveraging cybersecurity data analytics in the battle to prevent successful attacks and to quickly recover with resilience should a breach occur.

# Excuse #1

Siloed teams and a lack of understanding of the IT environment hamper effective data analytics.

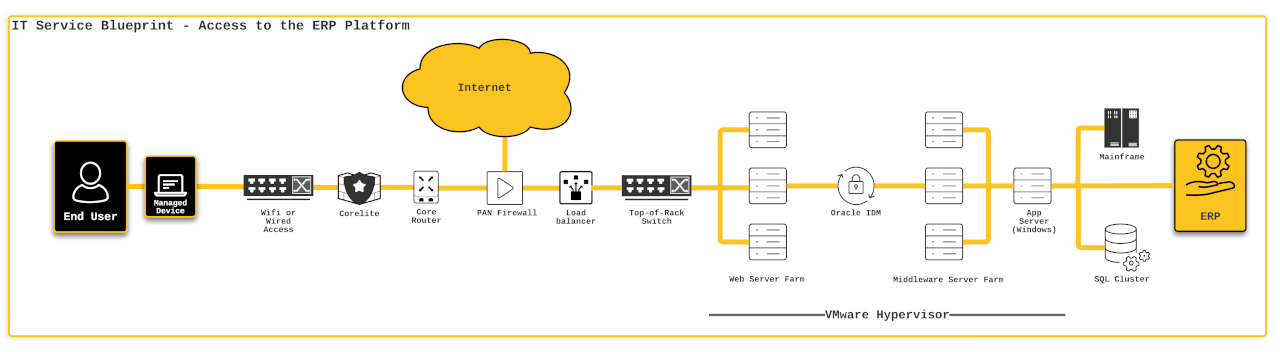

A lack of familiarity with the IT landscape hinders one's ability to identify the data sources that are relevant to an operation. Take, for instance, the typical scenario of a central office user accessing the corporate Enterprise Resource Planning (ERP) system. System elements along the connection route offer insights into security vulnerabilities, potential threats, or oncoming attacks. Yet a comprehensive understanding of these elements is imperative for data analytics that effectively narrate what is unfolding and what is likely to happen next.

The ODAM framework advocates for an IT blueprint workshop, where subject matter experts from diverse silos convene to chart the service landscape and pinpoint crucial data sources and their significance. In our ERP scenario (refer to figure below), this involves subject matter specialists from the networking, systems, application development & support, identity & access management, and other relevant teams coming together to delineate the precise connection of a central office user to the corporate ERP via the Internet.

With this newfound insight into the system components involved in connecting a central office user to the corporate ERP system, discussions can be held to determine the relevance and significance of logs and/or transactional data from each component for the purpose of cybersecurity data analytics. Other teams, including those in networking, application development and support, and systems, will come to understand that the benefits of this effort extend beyond cybersecurity and into the realm of general observability. The logs generated by systems, applications, and security tools are not limited to use in cybersecurity data analytics, but can also provide valuable performance information that these teams can use to uphold their service level agreements. As a result, discussions about the convergence of network operations centers (NOCs) and security operations centers (SOCs) become even more meaningful.
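The output of such a blueprint workshop can be captured in a very simple data structure. The sketch below is illustrative only: the component names, log sources, and the `Component` class are hypothetical and are not part of the ODAM framework itself; the owning teams are the ones named in the ERP scenario.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    """One system element on the path from the user to the ERP system."""
    name: str
    owner_team: str
    log_sources: list = field(default_factory=list)


# Hypothetical connection path for a central office user reaching the ERP.
ERP_PATH = [
    Component("central office workstation", "systems", ["endpoint logs"]),
    Component("office firewall", "networking", ["firewall logs"]),
    Component("identity provider", "identity & access management",
              ["authentication logs"]),
    Component("ERP application", "application development & support", []),
]


def sources_by_team(path):
    """Group the identified log sources by the team that owns them."""
    grouped = {}
    for component in path:
        grouped.setdefault(component.owner_team, []).extend(component.log_sources)
    return grouped


def components_without_sources(path):
    """List components for which no data source has been identified yet."""
    return [c.name for c in path if not c.log_sources]


if __name__ == "__main__":
    print(sources_by_team(ERP_PATH))
    print("Still to discuss:", components_without_sources(ERP_PATH))
```

A listing like this gives each silo a concrete view of what it contributes, and it makes gaps (components with no agreed data source) visible for the next discussion.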

# Excuse #2

There is a talent shortage, and cybersecurity staff are not skilled in data analytics.

The ODAM framework follows a systematic approach to map out all system components involved in various common use cases such as a user connecting to the Internet, accessing email, or an administrator connecting to a backend system. By doing so, the team can work together to locate and incorporate the relevant data sources into the cybersecurity analytics environment. Today's leading analytics platforms offer robust capabilities for visualizing large datasets, essential for SOC analysts, CISOs, and other organization leaders. The advantage of the ODAM framework is its clear and repeatable process for operationalizing data analytics for any use case, to increase efficiency and expertise. It's estimated that after completing three to five use cases, the cyber team should be able to continue identifying and operationalizing data analytics for two to three additional use cases per month. Through proper training and management, the challenges of a talent shortage and under-skilled staff in the data analytics field can be overcome.

# Excuse #3

Data is expensive.

The ODAM framework takes a more strategic approach to developing an ACE (Analytics Center of Excellence) by focusing on use cases and mapping out the relevant system components, rather than collecting as much data as possible, which can often prove to be very costly. Just as in gold mining not all dirt is pay dirt, in data mining not all data will provide value to an organization's decision-making capabilities. The goal is to identify and connect the data sources that will provide the most value to the organization's decision analytics capabilities. This approach reduces the costs associated with storing and managing irrelevant data, freeing up resources for more valuable data and activities.

The ODAM framework provides an alternative method for creating your ACE, guided by use cases that align with your organization's objectives. The framework suggests a structured process for collecting only the data needed to support the analytics required for each use case, as the data required for different use cases are likely to overlap. This helps to minimize data collection by disregarding unnecessary data and focusing on sources that provide significant statistical insights into your ACE's activities. The use-case driven approach results in more predictable budgeting compared to the costly "collect everything" approach and enables regular reassessment of data source requirements as business needs change.
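The effect of use-case-driven collection can be illustrated with a few lines of set arithmetic. This is a minimal sketch, not the framework's own tooling: the use cases are the ones mentioned earlier, while the data source names and the `plan_collection` helper are assumptions made for illustration.

```python
from collections import Counter

# Hypothetical mapping of use cases to the data sources each one needs.
USE_CASES = {
    "user connects to the Internet": {"firewall", "dns", "web proxy", "identity provider"},
    "user accesses email": {"email gateway", "dns", "identity provider"},
    "administrator connects to a backend system": {"vpn", "firewall", "identity provider"},
}

# Hypothetical inventory of everything that *could* be collected.
AVAILABLE = {
    "firewall", "dns", "web proxy", "identity provider",
    "email gateway", "vpn", "printer logs", "badge reader",
}


def plan_collection(use_cases, available_sources):
    """Return (needed, shared, skipped) data sources for the given use cases."""
    counts = Counter(src for sources in use_cases.values() for src in sources)
    needed = set(counts)                                   # used by at least one use case
    shared = {src for src, n in counts.items() if n > 1}   # reused across use cases
    skipped = set(available_sources) - needed              # not collected for now
    return needed, shared, skipped


if __name__ == "__main__":
    needed, shared, skipped = plan_collection(USE_CASES, AVAILABLE)
    print("Collect:", sorted(needed))
    print("Reused across use cases:", sorted(shared))
    print("Not collected:", sorted(skipped))
```

Because sources overlap, each new use case tends to add fewer new sources than the one before it, which is what makes the budget more predictable than a "collect everything" approach.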

Many security operations centers (SOCs) today function like archaeological digging sites, constantly searching through millions of logs for clues to past incidents to understand the vulnerability that was exploited, how the attacker entered the environment, how to contain the incident, and how to remove the attacker and their payload to get back to normal operations. These are important questions that can be answered through SIEM tools and other security tools in the SOC. However, I aim for a meteorological approach to cybersecurity data analytics, one that allows me to predict future cyber incidents and take preventative measures or to be ready to respond and recover in the event of a successful attack. The ODAM framework rekindles my hope for predictive analytics and forecasting of cybersecurity incidents. While the framework doesn't guarantee this outcome, it provides a roadmap to set up an ACE that, if implemented correctly, could pave the way for this type of analytics.

# A CISO’s ultimate desire for ODAM?

# Desire #1

Forecast Risk

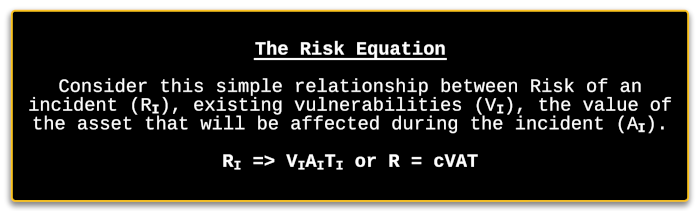

As a CISO, my ultimate goal is to know the risk of the next major incident occurring (RI), so that I can stop it or adequately prepare to respond and recover. Consider the simple relationship among the risk of an incident (RI), the threats facing an asset, existing vulnerabilities (VI), and the value of the asset that will be affected during the incident (AI).

From a mathematical perspective, the risk of an incident can be predicted if the threat to an asset is known, vulnerability information is readily accessible, and the value of the asset to the organization is understood. This is the ultimate goal of the ODAM process, to use past and present events, along with sound cyber data analytics, to forecast future incidents. Threat data can be sourced from various internal and external threat intelligence sources, and by normalizing the right data sources and incorporating them into the ACE, we can align with the scenario-based approach proposed by the ODAM framework. Vulnerability data is available from internal and external vulnerability scanners, technology vendors, and UBA (user behavioral analytics) platforms. On the other hand, the information about assets is more complex, given the large number of assets on major organizations' networks, and their multi-dimensional interrelationships and interdependencies.
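The relationship described above can be written compactly. The following is only a sketch: it assumes a multiplicative form, which is a common convention in risk assessment, and it introduces TI as a hypothetical symbol for the threat to the asset, since the text names RI, VI, and AI but gives no symbol for threat.

$$
\mathrm{RI} = \mathrm{TI} \times \mathrm{VI} \times \mathrm{AI}
$$

Under this assumed form, the risk falls to zero if any one factor does: no threat, no exploitable vulnerability, or an asset of no value. In practice none of the three is ever zero, which is why all three need to be measured.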

The asset is of paramount importance, but its complexity surpasses our understanding of threats and vulnerabilities. Threats are an inevitable reality, always lurking and waiting to strike. Nevertheless, their impact can be mitigated by acquiring solid threat intelligence as soon as possible and converting it into actions for the SOC (security operations center). Vulnerabilities, on the other hand, are somewhat within our grasp, as they can be eliminated or addressed by implementing a proper patching, scanning, and management program. Yes, zero-day vulnerabilities exist, but a robust vulnerability management program still positions an organization to handle them better when they arise. If an organization has a mature program in place to address vulnerabilities, adding another one is just a matter of course. However, if a zero-day occurs amid hundreds of unaddressed vulnerabilities, that critical patch becomes harder to install, as several other versions may need to be applied first.

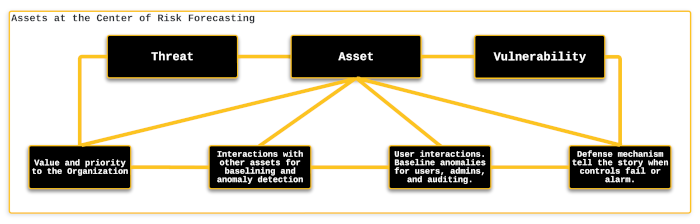

Assets are at the heart of the risk equation and are its most complex aspect to grasp. To prioritize assets and understand how likely each is to be compromised, one must consider not only the value an asset holds for the organization but also a range of other factors. These factors include:

# Asset Value

The worth of an asset depends on its functions or capabilities. For instance, an ERP or CRM system may hold sensitive information about employees, customers, and suppliers, which could give the organization a competitive advantage. Meanwhile, a nurse's station may be critical in providing patient care during emergencies. To prioritize and effectively weigh the risks, each organization needs to determine the direct value of the asset or the value it enables in other assets.

# Interactions with other Assets

The ODAM framework focuses on enumerating the interactions between assets in an organization, which helps to establish a baseline of normal interactions and identify anomalies that could indicate ongoing or imminent attacks.

# User Interactions with Assets

User-asset interactions provide valuable data that can be included in the organization's ACE. This baseline data can be used to detect anomalies and automatically or manually address them through the SOC.
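A baseline of this kind can be sketched in a few lines. The example below is a simplified illustration under stated assumptions, not a description of how ODAM or any particular analytics platform does it: it assumes interactions are already available as daily counts per (user, asset) pair, and the function names and the three-standard-deviation threshold are hypothetical choices.

```python
from statistics import mean, pstdev


def build_baseline(history):
    """history maps (user, asset) -> list of daily interaction counts.

    Returns a dict mapping each pair to (average, standard deviation).
    """
    return {pair: (mean(counts), pstdev(counts)) for pair, counts in history.items()}


def find_anomalies(baseline, today, k=3.0):
    """Flag pairs never seen before, or far above their usual daily volume."""
    anomalies = []
    for pair, count in today.items():
        if pair not in baseline:
            anomalies.append((pair, "never seen before"))
            continue
        avg, sd = baseline[pair]
        # Use a floor of 1.0 so very steady baselines do not flag tiny changes.
        if count > avg + k * max(sd, 1.0):
            anomalies.append((pair, "unusually high volume"))
    return anomalies


if __name__ == "__main__":
    history = {("alice", "erp"): [10, 12, 11, 9, 10]}
    today = {("alice", "erp"): 50, ("bob", "erp"): 1}
    for pair, reason in find_anomalies(build_baseline(history), today):
        print(pair, "->", reason)
```

The flagged pairs are candidates for review by the SOC, either automatically or manually; a production baseline would also need to account for seasonality, role changes, and similar effects that this sketch ignores.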

The defense mechanism around an asset is crucial in applying the risk equation. If that defense fails, prompt detection is vital to prevent successful exploitation by the automated attacks that constantly target an organization's network. This is where the ODAM framework comes in handy, providing visibility into the status of defense components and enabling prioritization of response actions based on other ongoing events in the environment.

The ultimate objective of the ODAM framework is to simplify the processes involved in building an effective cybersecurity ACE by bringing together the various components of the risk equation in a methodical and incremental manner, making it easier for cybersecurity professionals and other relevant stakeholders to understand and manage the risks they face.

# Desire #2

Have meaningful and actionable metrics and dashboards

In my opinion, the most impactful way to convey data is through the use of clear and concise visuals and metrics. The ODAM framework aims to simplify the process of transforming data into compelling narratives that can be communicated through the use of visual aids such as graphs, dashboards, metrics, and key performance indicators. The objective is to make sure that the information is easily accessible and understandable by all stakeholders, regardless of their role or level of expertise within the organization.

My ideal scenario would be for the president of an organization to simply glance at the daily dashboard and understand the current state of cybersecurity. If the dashboard displays a green color, it's a good day, and no major incidents are expected in the near future. Conversely, if the dashboard is red, the president knows that a significant breach is imminent and immediate action must be taken. In this scenario, the line worker would also have access to the same dashboard, albeit with varying levels of detail, and work proactively to mitigate the risk. In the end, when the CISO is called to the boardroom, they are able to respond with confidence, saying: "We have a problem, but we know how to fix it, and this is what it will take to do so."
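The idea of one dashboard with different levels of detail per audience can be sketched as follows. Everything here is an assumption made for illustration: the 0-to-1 score scale, the `RED_THRESHOLD` value, the role names, and the indicator names are hypothetical and would need to be defined by each organization.

```python
from dataclasses import dataclass

# Hypothetical cut-off above which an indicator turns the dashboard red.
RED_THRESHOLD = 0.7


@dataclass
class Indicator:
    name: str
    score: float  # assumed scale: 0.0 (no concern) to 1.0 (breach imminent)


def overall_status(indicators):
    """Return 'red' if any indicator reaches the threshold, else 'green'."""
    worst = max((i.score for i in indicators), default=0.0)
    return "red" if worst >= RED_THRESHOLD else "green"


def render_view(indicators, role):
    """Same status for everyone; operational roles also see the flagged details."""
    view = {"status": overall_status(indicators)}
    if role != "president":
        flagged = sorted(
            (i for i in indicators if i.score >= RED_THRESHOLD),
            key=lambda i: i.score,
            reverse=True,
        )
        view["details"] = [(i.name, i.score) for i in flagged]
    return view


if __name__ == "__main__":
    indicators = [
        Indicator("unpatched critical vulnerabilities", 0.8),
        Indicator("anomalous ERP logins", 0.3),
    ]
    print(render_view(indicators, "president"))
    print(render_view(indicators, "analyst"))
```

The executive view and the analyst view are derived from the same indicators, so both audiences are looking at the same underlying state, only at different depths.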

The ODAM framework strives toward standardizing cybersecurity metrics, which today lack uniformity. Financial metrics such as EPS (earnings per share) or FPE (forward price-to-earnings ratio) are well-known and widely accepted indicators of a company’s financial performance; cybersecurity has no equivalent. The ODAM framework offers a path to solving this problem by providing a methodical approach to analyzing data, determining relevant performance indicators, and creating common metrics that have the same meaning across organizations. This could give executives a clear understanding of their organization's cybersecurity performance and its resilience against incoming attacks. Furthermore, with the right data and tooling in the ACE, it paves the way for metrics and KPIs that can be included in a company's performance statistics and understood by a wider audience, much as EPS and FPE are today.

# ODAM, a New Day

CISOs are always searching for ways to grasp the immense amount of data that surrounds us and reveal the story it tells about the robustness of our defenses. However, there is a persistent challenge in balancing the attention and resources given to incident response, containment, and recovery on the one hand, and to planning and implementing preventative measures on the other. Despite being aware of the crucial role that data analytics plays in a successful cybersecurity strategy, many organizations still face obstacles such as IT silos, a shortage of skilled personnel, and cost concerns, resulting in a lack of focus and inadequate investment in this area. Presently, many security operations centers are akin to archaeological excavation sites, where analysts sift through millions of logs for days on end to uncover what has already occurred, just as archaeologists uncover historical secrets. CISOs aspire to a meteorological approach to cybersecurity data analytics, where the emphasis shifts to forecasting future cyber incidents in a manner similar to a meteorologist's weather predictions.

The ODAM framework envisions a future where cybersecurity data analytics is no longer a challenge for CISOs, but a solution. It promises to simplify the complex process of bringing together the different components of risk - assets, vulnerabilities, and threats - through a methodical and incremental approach to building an effective cybersecurity ACE. With the ODAM framework, the barriers to leveraging cybersecurity data analytics, such as IT silos, talent shortages, and cost, can be overcome. The ultimate goal of a CISO is to understand the risk of a potential incident, and then to prevent it or be prepared to respond and recover. The framework aims to make this goal a reality by offering a repeatable process for turning data into actionable stories through the use of visual aids, dashboards, metrics, and KPIs. The right data and tools in your ACE should enable the creation of metrics and KPIs that are as widely understood as a company's EPS or FPE ratios, providing a clear and concise representation of the organization's cybersecurity performance.

# About the Author

Leo Howell, Chief Information Security Officer

Georgia Institute of Technology

Office of Information Technology

756 West Peachtree Street NW

Atlanta, Georgia 30308

LinkedIn: https://www.linkedin.com/in/leohowell/

Leo Howell is a visionary information security leader who is passionate about the "I" in IT, as he believes that data leveraged as a strategic asset is a major competitive benefit to any organization. Leo has over 25 years of service in IT and currently serves as the chief information security officer for Georgia Institute of Technology, where he practices his balanced approach to cybersecurity: stop the “bad” guys and empower our stakeholders to carry out the organization’s mission. Previously, Leo served as the chief information security officer for the University of Oregon, and before that he held multiple leadership roles in security and audit at NC State University. Leo received his B.Sc. in Computer Science and Electronics from the University of the West Indies, Jamaica, and his MBA from NC State University. Leo is a Certified Information Systems Security Professional (CISSP), a Certified Information Systems Auditor (CISA), and a proud member of the international honor society Beta Gamma Sigma.